Capacity planning plays an important role in choosing the right hardware configuration for Hadoop components. This paper describes sizing and capacity planning considerations for a Hadoop cluster and its components.

1. Hadoop clusters and capacity planning · I captured 5 days of job execution details from the history manager and loaded them into a spreadsheet. · Calculate how long .

Big data capacity planning: achieving right-sized Hadoop clusters and optimized operations. Abstract: Businesses are considering more opportunities to leverage data for different purposes, impacting resources and resulting in poor loading and response times. Hadoop is increasingly being adopted across industry verticals for information.

Aug 2, 2020: Since your disk tolerance is 1, you should preferably have at least 3 disks for HDFS, because even if you lose 1 disk you still have 2 .

Big data capacity planning: achieving the right size of the Hadoop cluster, by Nitin Jain, Program Manager, Guavus, Inc. As the data analytics field matures, the amount of data generated is growing rapidly, and so is its use by businesses. This increase in data helps improve data analytics, and the result is a continuous cycle of data and information generation.

Hadoop Cluster Capacity Planning Of Data Nodes For Disks Per Data

A Hadoop cluster is a vital strategic asset when you have to store and analyze huge volumes of big data in a distributed environment. In this article, we will discuss Hadoop cluster capacity planning with maximum efficiency, considering all the requirements.

Hadoop cluster capacity planning. Suppose a Hadoop cluster processes approximately 100 TB of data in a year. The cluster was set up for 30% real-time and 70% batch processing, though there were nodes set up for NiFi, Kafka, Spark, and MapReduce. In this blog, I cover capacity planning for data nodes only.

Hadoop capacity planning and chargeback analysis · Create capacity usage reports for any time period to measure utilization trends for your hardware, CPU .

Planning a Hadoop cluster. Picking a distribution and version of Hadoop. One … there is enough storage capacity, but also that we have the CPU and memory to .
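For the scenario above of roughly 100 TB of data per year, a back-of-the-envelope raw storage estimate can be sketched as follows. Only the 100 TB figure comes from the text. The replication factor of 3 is the HDFS default; the compression ratio, temporary-space overhead, and maximum disk fill level are illustrative assumptions, and the function name is hypothetical.

```python
def raw_storage_tb(yearly_data_tb, replication=3, compression_ratio=1.0,
                   temp_overhead=0.25, max_fill=0.8):
    """Rough raw disk capacity (TB) needed to hold one year of data.

    All parameters except yearly_data_tb are assumptions for illustration:
    - replication: HDFS block replication factor (3 is the HDFS default)
    - compression_ratio: original size / compressed size (1.0 = no compression)
    - temp_overhead: extra fraction reserved for intermediate/temporary data
    - max_fill: fraction of each disk we are willing to fill
    """
    stored = yearly_data_tb / compression_ratio    # size after compression
    replicated = stored * replication              # every block is stored N times
    with_temp = replicated * (1 + temp_overhead)   # headroom for scratch data
    return with_temp / max_fill                    # never plan for 100% full disks


estimate = raw_storage_tb(100)
print(f"Raw storage needed for one year: about {estimate:.0f} TB")
```

Changing any of the assumed ratios shifts the result considerably, so the output is a starting point for discussion rather than a purchase figure.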


Jul 14, 2017: Learn about data node requirements, the RAM requirements for data nodes, CPU cores and tasks per node, and more.

May 7, 2020: Choose a cluster type. The cluster type determines the workload your HDInsight cluster is configured to run. Types include Apache Hadoop, .

When planning a Hadoop cluster, picking the right hardware is critical. No one likes the idea of buying 10, 50, or 500 machines just to find out she needs more RAM or disk. Hadoop is not unlike traditional data storage or processing systems in that the proper ratio of CPU to memory to disk is heavily influenced by the workload.

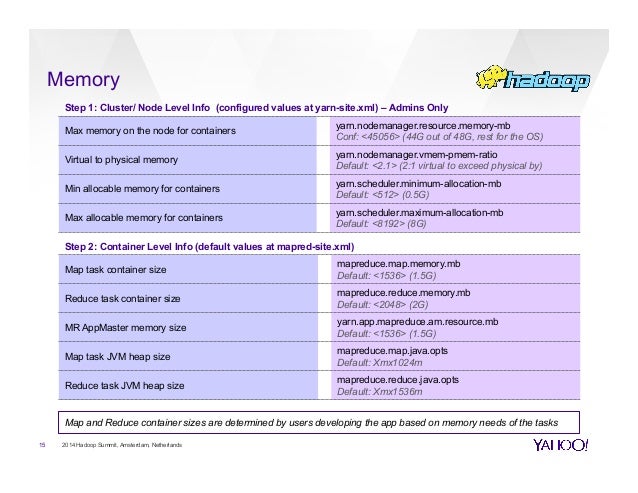

The below applies mainly to Hadoop 1.x versions. We will talk about YARN later on. Capacity planning usually flows from a top-down approach of understanding: how many nodes you need; what the capacity of each node is on the CPU side; and what the capacity of each node is on the memory side.

Hadoop is fairly flexible, which simplifies capacity planning for clusters. Still, it's important to consider factors such as IOPS and compression rates. The first rule of Hadoop cluster capacity planning is that Hadoop can accommodate changes. If you overestimate your storage requirements, you can scale the cluster down.
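The top-down questions listed above (how many nodes, and what each node can handle on the CPU and memory side) can be sketched roughly as below. This is a minimal illustration, not a sizing method taken from the source: the function names, the reserved cores and RAM, and the memory per task are all hypothetical values chosen for the example.

```python
import math


def nodes_for_storage(raw_tb, disks_per_node, disk_tb):
    """Number of data nodes needed to provide raw_tb of raw disk capacity."""
    return math.ceil(raw_tb / (disks_per_node * disk_tb))


def tasks_per_node(cores, ram_gb, reserved_cores=2, reserved_ram_gb=8,
                   ram_per_task_gb=4):
    """Concurrent tasks one node can run, limited by CPU or memory.

    Assumed for illustration: some cores and RAM are reserved for the OS
    and Hadoop daemons, and each task needs one core and a fixed amount
    of RAM. The tighter of the two limits wins.
    """
    by_cpu = cores - reserved_cores
    by_mem = (ram_gb - reserved_ram_gb) // ram_per_task_gb
    return max(0, min(by_cpu, by_mem))


# Hypothetical hardware: 12 disks of 4 TB each, 16 cores, 64 GB RAM per node.
nodes = nodes_for_storage(480, disks_per_node=12, disk_tb=4)
tasks = tasks_per_node(cores=16, ram_gb=64)
print(f"{nodes} nodes, {tasks} concurrent tasks per node")
```

Taking the minimum of the CPU and memory limits makes it easy to see which resource is the bottleneck for a given hardware profile.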

Hadoop clusters and capacity planning. Welcome to 2016! As Hadoop races into prime-time computing systems, issues such as how to do capacity planning, assessment and adoption of new tools, backup and recovery, and disaster recovery/continuity planning are becoming serious questions with serious penalties if ignored.

Performance tuning and capacity planning for clusters. Monitor the Hadoop cluster and deploy security. With this, we come to the end of this article. I hope I have shed some light on Hadoop cluster capacity planning, along with the hardware and software required.

Hadoop cluster capacity planning of data nodes for batch and in-memory processes. Let's talk about capacity planning for data nodes. We'll start with gathering the cluster requirements and end by.

If you need more storage than you budgeted for, you can start out with a small cluster and add nodes as your data set grows.


The purpose of this document is to show how to leverage R to predict HDFS growth, assuming we have access to the latest fsimage of a given cluster. This way we can forecast how much capacity would need to be added to the cluster ahead of time. In the case of on-prem clusters, ordering h/w can be a lengthy proc.

Capacity planning for your data: the number of machines to purchase will depend on the volume of data to store and analyze, which will drive the number of spinning disks to get on a per-machine basis (usually a fixed number of hard drives per machine).

Abstract. Capacity planning is a very important process in the successful implementation of big data Hadoop projects. This is especially true for information .
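The growth-forecasting idea mentioned above can be illustrated with a simple linear trend. The source describes using R on fsimage data; the sketch below instead uses plain Python with an ordinary least-squares fit, and the usage samples, capacity figure, and function names are all hypothetical. It assumes growth is roughly linear, which may not hold for a real cluster.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(days, used_tb, capacity_tb):
    """Days after the last sample until the fitted trend reaches capacity.

    Returns None when usage is flat or shrinking, since the trend then
    never reaches the capacity limit.
    """
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None
    return (capacity_tb - intercept) / slope - days[-1]


# Hypothetical samples: HDFS usage (TB) measured every 30 days.
days = [0, 30, 60, 90]
used_tb = [100.0, 110.0, 120.0, 130.0]
remaining = days_until_full(days, used_tb, capacity_tb=200.0)
print(f"Estimated days until the cluster is full: {remaining:.0f}")
```

Comparing the estimated days remaining with the hardware ordering lead time gives a rough signal for when to start procurement.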

Hadoop cluster capacity planning · Prerequisites. While setting up the cluster, we need to know the below parameters: · Data node requirements. With the above .

Capacity planning is an exercise and a continuous practice to arrive at the right infrastructure that caters to the current, near-future, and future needs of a business. Businesses that embrace capacity planning will be able to efficiently handle massive amounts of data and manage the user base.